After sleeping off my excitement about the Swift programming language, I awoke this morning to an innocent, bewildered tweet from Farhad Manjoo at the New York Times, who wondered aloud why anyone would need another programming language.

I would love to read a somewhat but not extremely technical article on this question: Why are we still seeing new programming languages?

- Farhad Manjoo (@fmanjoo) June 3, 2014

Indeed, there are computers in nearly everything these days; doesn't the world have enough computer languages already? The short answer is: No. Here's a dead-simple, Luddite-friendly explanation of why we'll keep seeing new languages as long as there are people to learn them. (Hat-tip to our very own Node.js ninja Chris McClellan for discussing this post with me.)

To Teach Programming

If this question can be framed as a chicken-and-egg problem, we'll start with the egg. Many languages (such as BASIC, my first language) were created to make programming concepts easier for beginners, hobbyists, and human beings in general to grasp. The language computers actually "think" in is binary code. The reason for this is simple: If you break all your instructions to the machine into "yes" or "no" questions, represented by 1 or 0, then there's very little room for the computer to misread them (though it will still faithfully carry out any mistakes in your code). Much of the credit for this brilliant innovation goes to a guy named Claude Shannon, who realized after WWII that signals like radio transmissions (which fade in and out) were a terribly unreliable vector for important information, like the kind you might send to be stored or processed by a computer.

Pretty much every language designed since then has existed to make it easier for humans to write reliable, human-readable instructions for a machine, without anyone clawing their eyes out from the boredom and redundancy of writing in binary. (In binary code, for example, the capital letter "A" is 01000001 and a lower-case "a" is 01100001. Writing a whole word this way would take a while, never mind the thousands of lines that make up most programs.)
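You can check the "A" and "a" figures yourself. Here's a minimal Python sketch, assuming the standard ASCII encoding (which assigns the number 65 to "A" and 97 to "a"); the function name `to_binary` is made up for illustration.

```python
# Encode text as the 1s and 0s a computer stores.
# Assumes ASCII, where each English letter is one 8-bit byte.

def to_binary(text):
    """Return a list with the 8-bit binary form of each character in `text`."""
    # str.encode gives the numeric code of each character (65 for "A", 97 for "a");
    # format(..., "08b") writes that number in binary, padded to 8 digits.
    return [format(code, "08b") for code in text.encode("ascii")]


if __name__ == "__main__":
    print(to_binary("A"))  # ['01000001']
    print(to_binary("a"))  # ['01100001']
    # A five-letter word already needs 40 ones and zeros.
    print(" ".join(to_binary("Swift")))
```

Every extra letter adds another eight digits, which is exactly the tedium higher-level languages were invented to spare us.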

When a language uses a lot of plain English words, making it easy to read, it's known as "verbose." Objective-C, Apple's programming language, is infamously (even absurdly) verbose, which is arguably part of the reason Apple got its reputation for being user-friendly: It's not just the computers that are easy to use; it's the language itself. This is dummy code, but a method call in Objective-C looks like this. Even if you've never programmed in your life, you can sort-of, kind-of understand what the engineer is telling the computer to do:

[someInstance doSomethingWithObject:a andAnotherParam:b];

In fact, part of the reason there are so many "hacker kits" out there for physical electronics is that making real-life gadgets is often the most palatable way into programming concepts for people who are turned off by staring at code like this. So if the above sounds boring, that doesn't mean programming isn't for you; just try starting with hardware instead of software.

The Ego Copy

Like other innovations, languages often begin as some stubborn person's attempt to do things their own way, aka "The Best Way." Here's an example from the early days.

In the early 1970s, Lisp was a language of choice at AI research labs like MIT's and Stanford's. At MIT, programmers edited their code with TECO, what today we would call a primitive text editor--think of the TextEdit app on your Mac. But it wasn't exactly straightforward to use.

One could not place characters directly into a document by typing them ... but rather one had to enter a character in the TECO command language telling it to switch to input mode, enter the required characters, during which time the edited text was not displayed on the screen.

Then you had to hit the Escape key to switch back and see your changes, almost like saving and viewing the draft of a blog post. Anyway, it was a pain.

Meanwhile, an engineer at Stanford had written an editor that showed your text on screen as you typed it. An engineer from MIT saw this innovation on a visit to Palo Alto and decided to reproduce it himself back in Cambridge, but with a few improvements. Colleagues eventually began writing "macros" for this new interface--reusable bits of code that saved time. When they formalized the collection of macros into a system, they called it EMACS, short for "Editing MACroS."

"But that doesn't sound like a new language!" you might be saying. True, but like spoken languages, programming languages are often derived from a neighboring language and then touted as "a new language" once they are sufficiently different from the original that their proponents can make that claim. The story of EMACS brings us to the next reason why programming languages get invented: They save work.

Abstraction

If you're a liberal arts type, don't get scared by this term--it means the same thing in programming as, you know, life. Think of it this way: In writing, we call a group of words a "paragraph." That's an abstract concept which refers to a thought, or a chunk of a written statement. We employ the word "paragraph" so that we don't have to recite the entire text of that paragraph when we tell people which section of the article we are referring to.

Similarly, programmers are always trying to create reusable bits of code, because like other humans, they are lazy, and they would rather not repeat themselves. About 30 years ago, there was a big movement toward making computer programs almost entirely out of reusable parts, which programmers called "objects." (It's kind of like the modular movement in architecture, car, or furniture design.) This approach is known as object-oriented programming, and it was so innovative at the time that NeXT, Steve Jobs's company after Apple, championed it as the future of programming. They were so sold on object-oriented programming that they built their platform on Objective-C, an object-oriented version of the C language that Brad Cox and Tom Love had created earlier in the 1980s. Apple inherited Objective-C when it bought NeXT, and turned NeXT's operating system into Mac OS X.
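To make the "paragraph" analogy concrete, here's a toy sketch in Python (not Objective-C) of the object-oriented idea: a `Paragraph` object bundles some text with the things you can do to it, and an `Article` is assembled from those reusable parts. The class and method names are hypothetical, invented for this example.

```python
class Paragraph:
    """A reusable part: bundles some text with the things you can do to it."""

    def __init__(self, label, text):
        self.label = label  # a short name we can use instead of reciting the text
        self.text = text

    def word_count(self):
        return len(self.text.split())


class Article:
    """Built out of Paragraph objects, the way a car is built from parts."""

    def __init__(self, paragraphs):
        self.paragraphs = {p.label: p for p in paragraphs}

    def find(self, label):
        # Refer to a paragraph by its label, not by reciting its whole text.
        return self.paragraphs[label]

    def word_count(self):
        # Reuse Paragraph.word_count instead of repeating the counting logic.
        return sum(p.word_count() for p in self.paragraphs.values())


if __name__ == "__main__":
    article = Article([
        Paragraph("intro", "Why are we still seeing new programming languages?"),
        Paragraph("abstraction", "Programmers seek to not repeat themselves."),
    ])
    print(article.find("abstraction").text)
    print(article.word_count())  # 14
```

The counting logic lives in one place; every article built from `Paragraph` parts gets it for free, which is the laziness object-oriented programming was meant to reward.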

Swift, Apple's new programming language, is in a sense another layer of abstraction: It was built to work alongside Objective-C, using the same Apple frameworks and calling existing Objective-C code directly, but in a more modern style that may feel more familiar to developers coming from languages like JavaScript. It doesn't get translated into Objective-C or C, though; like Objective-C, Swift is compiled (by way of the LLVM compiler toolkit) straight down to the machine code a processor runs. Lots of other languages really are mostly a new coat of paint, meant to simply accommodate the style of the writer. CoffeeScript is an example of JavaScript written in a different style: before the program runs, CoffeeScript gets compiled into plain JavaScript all the same. (Here's a more detailed rundown of what makes Swift different from Objective-C.)
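Here's a toy illustration, written in Python, of what "compiling CoffeeScript into JavaScript" means: a hypothetical `toy_transpile` function that rewrites one narrow pattern of CoffeeScript-style function syntax into JavaScript. The real CoffeeScript compiler handles the whole language and formats its output differently; this sketch only shows the idea of one language being translated into another before it runs.

```python
import re

# Matches one narrow CoffeeScript-style pattern: name = (args) -> expression
ARROW_FUNCTION = re.compile(r"^\s*(\w+)\s*=\s*\(([^)]*)\)\s*->\s*(.+?)\s*$")


def toy_transpile(line):
    """Rewrite a one-line arrow function into an equivalent JavaScript function."""
    match = ARROW_FUNCTION.match(line)
    if match is None:
        raise ValueError("this toy only understands 'name = (args) -> expression'")
    name, args, body = match.groups()
    # Doubled braces {{ }} produce literal braces inside an f-string.
    return f"var {name} = function({args}) {{ return {body}; }};"


if __name__ == "__main__":
    print(toy_transpile("square = (x) -> x * x"))
    # var square = function(x) { return x * x; };
```

The shorter syntax on the left is purely for the writer's benefit; what the browser actually runs is the JavaScript on the right.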

New Infrastructure

Sometimes you need a new language because there are tectonic changes that need adapting to. This has been happening lately with the coming of the "cloud."

Traditionally, programs have run locally on your computer. These are what we now call the "apps" you download and install on your computer. Every once in a while, something called "the Internet" comes along which makes it possible to deliver apps running on one computer to another computer, far away. These are web apps, such as Facebook.com or Twitter.com. They're "applications" in the sense that they are not just static information pages like, say, the documents the DMV posts on its site. In a web app, you can take actions and see those changes reflected (seemingly) instantly, even though the actual Facebook.com "app" is running on servers somewhere, not on your own computer.

Building apps this way is actually quite expensive: You use up a lot of computing power and bandwidth delivering "software as a service," aka "from the cloud," as it's called. Those clouds cost a lot of money to operate, not least to air-condition the servers. So in 2009 a programmer named Ryan Dahl released an open source platform called Node.js, which can make it much cheaper to run apps over the web for reasons I won't go into here.
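For the curious: one commonly cited reason Node.js is cheap to run is its event-driven, non-blocking design, in which a single process juggles many connections and switches to other work whenever a request is waiting on a database or network, instead of dedicating a thread to each waiting request. The sketch below illustrates that idea with Python's `asyncio` as a stand-in; it is not Node.js code, and the `handle_request` and `serve` functions and the 0.1-second "database call" are hypothetical.

```python
import asyncio
import time


async def handle_request(request_id):
    # Stand-in for slow I/O, such as waiting on a database or another server.
    # While this request waits, the event loop is free to work on others.
    await asyncio.sleep(0.1)
    return f"response {request_id}"


async def serve(n_requests):
    # Start every request at once on a single thread and collect the results
    # (asyncio.gather returns them in the order the requests were started).
    return await asyncio.gather(*(handle_request(i) for i in range(n_requests)))


if __name__ == "__main__":
    start = time.perf_counter()
    responses = asyncio.run(serve(1000))
    elapsed = time.perf_counter() - start
    # Handled strictly one after another, 1,000 waits of 0.1 s would take about 100 s.
    print(len(responses), "responses in", round(elapsed, 2), "seconds")
```

Because the waiting overlaps, the whole batch finishes in a small fraction of the time a one-at-a-time server would need, and on a single thread, which is the kind of efficiency that translates into fewer servers to pay for.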

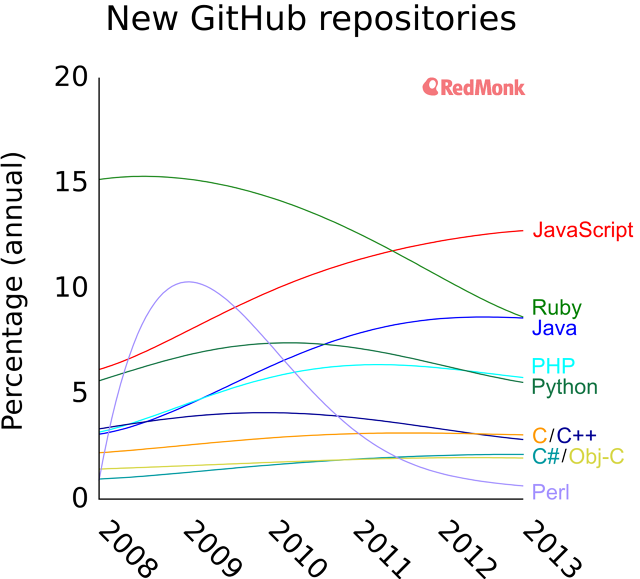

Many developers would already prefer to have their apps running as services, because a website has lots of advantages over a downloadable app, such as: nothing to download! Making web apps cheaper to run at scale has caused an explosion in popularity for Node.js, which lets developers write their server code in JavaScript. Node (along with a few other popular frameworks like Meteor.js and Angular.js) helps explain why JavaScript as a language has exploded in popularity recently.

This isn't to say that the most popular languages totally dominate. Less popular languages like Erlang have also gotten renewed interest since apps started moving into the cloud; in fact, Erlang is what powers WhatsApp's servers.

Culture

Programming languages are made by people for other people. As a result, they carry all the cultural artifacts of their makers, and some of those cultural artifacts are turnoffs to other groups of engineers, who turn around and make their own version. This has happened countless times in the history of computer science, leading to lots of dialects of the most popular languages. But nowhere is it more starkly apparent than in the first Arabic programming language. As we wrote last year about Ramsey Nasser, who also created the first Emoji programming language:

Nasser commented that even in computer coding, "the tools we use carry cultural assumptions from the people that made them." When Nasser created قلب, he ran into trouble when he tried to translate the words "true" and "false" into Arabic. He ended up using "correct" and "incorrect" instead, and though the concepts did not exactly align, he said it "turned into an amazing conversation that [he] got to have with [his] parents and friends." Nasser aims at creating universality in coding: "Emojinal is an attempt to step away from cultural baggage."

This is another way of saying that old adage: The language you speak changes the way you think. Some languages suit certain kinds of thinking; others suit other kinds. As we wrote in April:

"Not only are languages different tools for different jobs, but they are technologies that shape how you think about programming," says Richard Pattis, a senior lecturer of Informatics at UC Irvine who invented the Karel educational programming language in 1981...To expand their minds, Pattis recommends that versatile programmers learn languages from different language paradigms, whether it be object-oriented languages (e.g., C++/Java), functional languages (e.g., ML and Haskell), scripting languages (e.g., Lisp and Python), logic-based languages (e.g., Prolog), or low-level languages (like C, the Java Virtual Machine or a machine language). The point is not necessarily fluency, but gaining a conceptual vocabulary to attack problems in new ways. Good programmers don't just learn how to code--learning core concepts teaches them how to wrap their brain around a problem and produce efficient code to solve it.
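To make "different paradigms" a little more concrete, here's one small problem (adding up the squares of the even numbers in a list) solved two ways in Python, a language that happens to support both styles. The function names are illustrative, not from any library.

```python
from functools import reduce


# Imperative style: step-by-step instructions that update a running total.
def sum_even_squares_imperative(numbers):
    total = 0
    for n in numbers:
        if n % 2 == 0:
            total += n * n
    return total


# Functional style: describe the result as a pipeline of transformations
# (keep the evens, square them, fold them together) without mutating anything.
def sum_even_squares_functional(numbers):
    evens = filter(lambda n: n % 2 == 0, numbers)
    squares = map(lambda n: n * n, evens)
    return reduce(lambda acc, x: acc + x, squares, 0)


if __name__ == "__main__":
    data = range(1, 11)
    print(sum_even_squares_imperative(data))  # 220
    print(sum_even_squares_functional(data))  # 220
```

Both give the same answer, but they ask you to think about the problem differently: one as a sequence of steps, the other as a chain of transformations. That shift in thinking is the "conceptual vocabulary" Pattis is talking about.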

Programmers--if you can think of other reasons why languages rise (or fall) please let me know on Twitter @chrisdannen.

[GitHub language popularity graphic courtesy of RedMonk]